Peregrinação – A live cinema approach for paper puppetry

Digital media as an interface between puppetry and film to enhance the traditional paper theater experience.

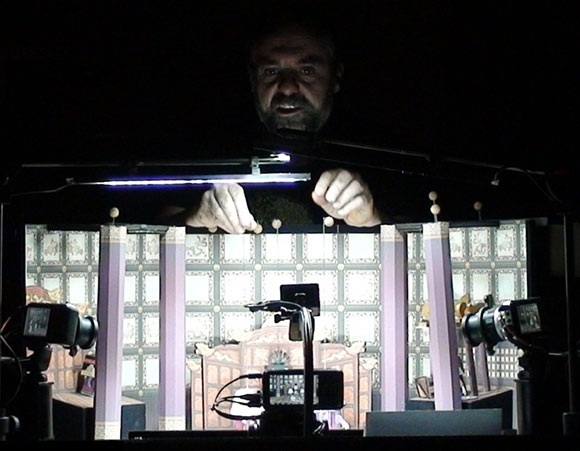

Traditional paper puppetry shows are usually presented in small venues to small audiences because the puppets and sets are small. We present a miniaturized multi-camera studio set as a novel way to bring puppetry to a larger audience.

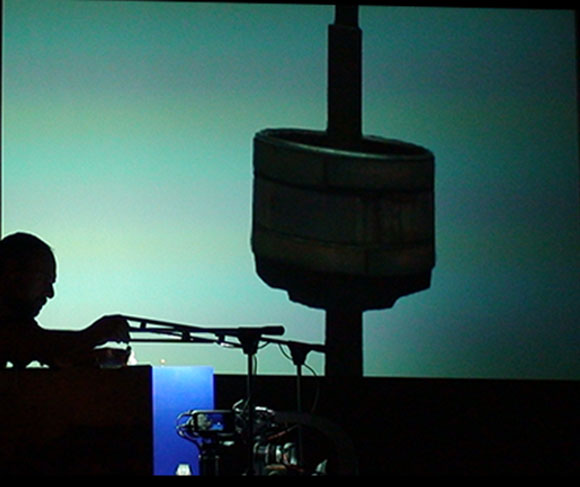

The puppeteer tells the story by performing with tangible puppets in a physical set, and the audience can compare this performance with the final composited image on a screen, as if they were behind the cameras.

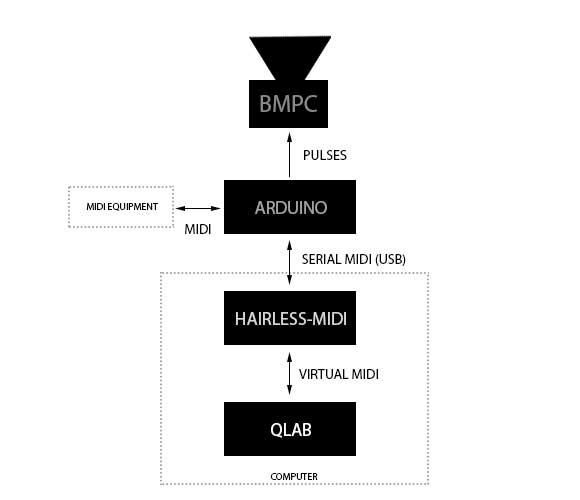

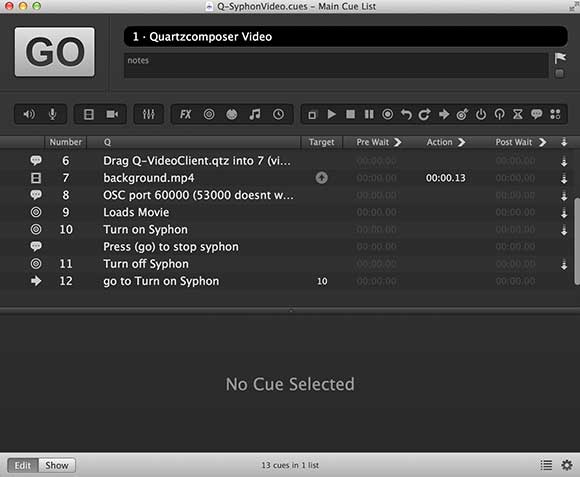

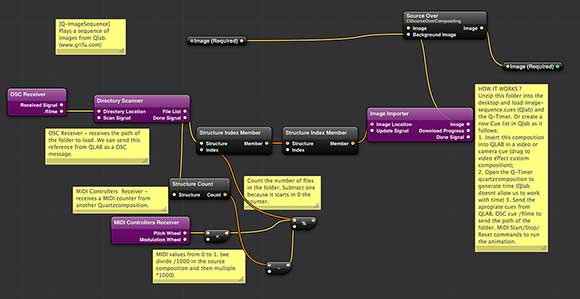

We have developed a multimedia solution based on virtual “strings” that connect computer applications, devices, and real-time effects, giving full control to a single puppeteer: a one-man show.
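One way to picture such virtual “strings” is as a routing table that ties each physical control to one or more actions in the software. The sketch below is only an illustration under that assumption: the `StringBoard` class and the control names (`pedal_1`, `fader_3`) are hypothetical and are not taken from the production's actual system.

```python
from typing import Callable, Dict, List

# An action receives the control's current value (e.g. 0.0 to 1.0).
Action = Callable[[float], None]


class StringBoard:
    """Hypothetical routing table: each 'string' ties a control to actions."""

    def __init__(self) -> None:
        self._strings: Dict[str, List[Action]] = {}

    def tie(self, control: str, action: Action) -> None:
        """Attach an action to a named control (one control may drive many)."""
        self._strings.setdefault(control, []).append(action)

    def pull(self, control: str, value: float) -> int:
        """Fire every action tied to the control; return how many ran."""
        actions = self._strings.get(control, [])
        for action in actions:
            action(value)
        return len(actions)


def make_demo_board(state: dict) -> StringBoard:
    """Wire two illustrative strings into a shared show-state dictionary."""
    board = StringBoard()
    # A foot pedal cuts to camera 2 (the value is ignored for a simple switch).
    board.tie("pedal_1", lambda _value: state.update(camera=2))
    # A fader sets the volume of a wind sound effect.
    board.tie("fader_3", lambda value: state.update(wind_volume=value))
    return board
```

With a layout like this, a single performer can trigger camera cuts and effects from whatever controls are within reach, because every control resolves to actions through one shared table.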

By exploring digital media technology we overcome the limitations of traditional puppetry, and by mixing puppetry with cinematic techniques we present the experience of live cinema.

A coproduction of Lafontana – Animated Forms and Teatro Nacional São João (TNSJ), based on the original text by Fernão Mendes Pinto and performed by Marcelo Lafontana.

Inspired by the adventures of the Portuguese explorer Fernão Mendes Pinto, as recounted in his book “Peregrinação”, published in 1614, Marcelo Lafontana journeys through strange stories presented in a miniature world.

By bringing together puppetry, in particular the expressiveness of paper theater, and cinematic language, this small world becomes a large space of illusion in which the narrative unfolds.

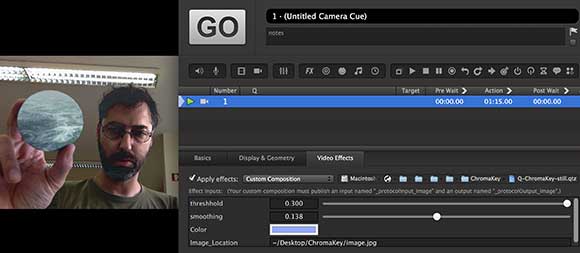

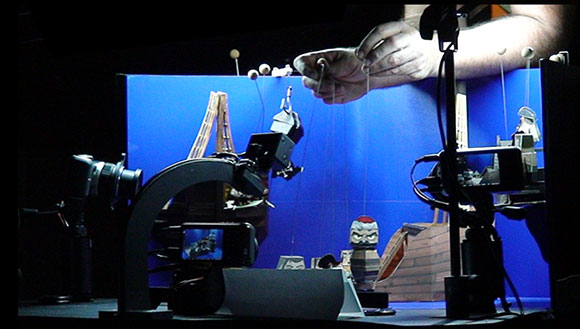

The stage is transformed into a film studio, where sets and characters drawn and cut from cardboard are handled in front of video cameras. The camera images are fed into a multimedia architecture that provides image processing, image mixing, sound effects, chroma key, and performance animation, all in real time. The final result is projected on a screen, like the sail of a boat that opens to the charms of a journey through the imagination.
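To make the chroma key step concrete, here is a minimal sketch of the general technique: pixels close to a key colour (green is assumed here) are replaced with the matching pixels of a background image. Frames are modelled as plain lists of RGB tuples so the example has no dependencies. The function names and threshold values are illustrative assumptions, not the production's actual pipeline, which would run on live video with optimised tools.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (red, green, blue), each 0 to 255
Frame = List[List[Pixel]]      # rows of pixels


def is_key_colour(pixel: Pixel, dominance: float = 1.3, min_green: int = 80) -> bool:
    """Treat a pixel as 'green screen' when green clearly dominates red and blue.

    The thresholds are illustrative defaults and would need tuning to the
    real lighting and backdrop.
    """
    r, g, b = pixel
    return g >= min_green and g > dominance * max(r, b)


def chroma_key(foreground: Frame, background: Frame) -> Frame:
    """Composite two equally sized frames.

    Key-coloured pixels in the foreground (the camera image of the puppet)
    are replaced by the background pixel at the same position.
    """
    if len(foreground) != len(background) or any(
        len(fg_row) != len(bg_row) for fg_row, bg_row in zip(foreground, background)
    ):
        raise ValueError("foreground and background must have the same dimensions")

    return [
        [bg if is_key_colour(fg) else fg for fg, bg in zip(fg_row, bg_row)]
        for fg_row, bg_row in zip(foreground, background)
    ]
```

A hard per-pixel decision like this leaves jagged edges around the puppet; real-time keyers usually compute a soft matte and blend instead, which is beyond the scope of this sketch.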