ToyWorlds was evaluated at a junior school as part of our virtual marionette research.

Picture 1 – Children interacting with the game in the classroom.

Introduction

We challenged children aged 5 and 6 to grab a virtual puppet and play with it.

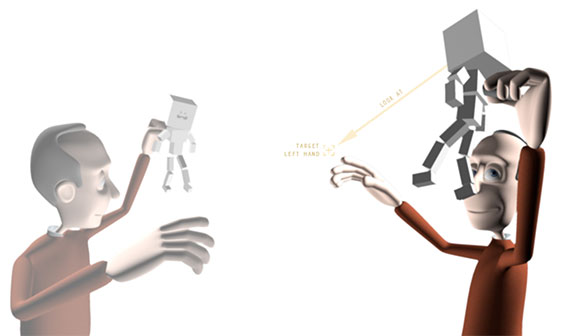

The main goal was to better understand how children of these ages who have never used digital interfaces based on body motion respond to this kind of interaction. ToyWorlds is a two-player game in which each player controls a virtual marionette with their hands: the left hand manipulates the puppet's body and the head follows the motion of the right hand. In other words, players grab the puppet with the left hand and, with the right hand, move the target the puppet is looking at. In this way we challenge the participants to control their own bodies as if they were marionette controllers. The experience is close to how puppeteers manipulate their puppets using rods, strings, or other kinds of controllers.
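The control mapping described above can be sketched as follows. This is a minimal illustration, not the ToyWorlds implementation: it assumes the body tracker reports each hand as a normalized 2D position (0 to 1, origin at the top-left), and the names `Vec2`, `PuppetControls`, `to_scene` and `map_hands`, as well as the 16 by 9 scene size, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Vec2:
    x: float
    y: float


@dataclass
class PuppetControls:
    body_anchor: Vec2  # where the puppet's body is held (left hand)
    look_target: Vec2  # where the puppet's head looks (right hand)


def to_scene(hand: Vec2, width: float, height: float) -> Vec2:
    """Map a normalized hand position (0..1, origin top-left) to scene units."""
    # Clamp so a hand leaving the tracked area cannot drag the puppet off screen.
    x = min(max(hand.x, 0.0), 1.0)
    y = min(max(hand.y, 0.0), 1.0)
    # Flip y: tracker y grows downwards, scene y grows upwards.
    return Vec2(x * width, (1.0 - y) * height)


def map_hands(left: Vec2, right: Vec2,
              width: float = 16.0, height: float = 9.0) -> PuppetControls:
    """Left hand drives the puppet's body, right hand drives its look target."""
    return PuppetControls(body_anchor=to_scene(left, width, height),
                          look_target=to_scene(right, width, height))


controls = map_hands(Vec2(0.25, 0.5), Vec2(0.75, 0.25))
print(controls)
# PuppetControls(body_anchor=Vec2(x=4.0, y=4.5), look_target=Vec2(x=12.0, y=6.75))
```

Keeping the two hands in separate fields makes the split of responsibilities explicit: nothing in the body anchor depends on the right hand, which is exactly why players have to coordinate two independent motions.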

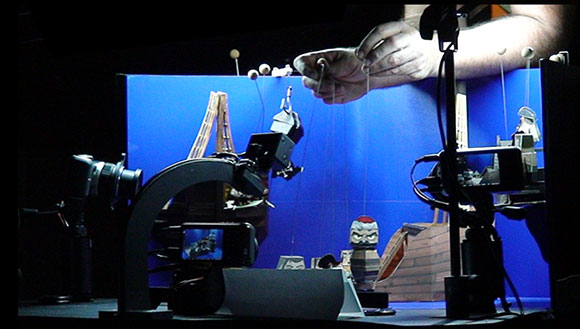

Picture 2 – Simulation of ToyWorlds puppet manipulation using both hands

In puppetry, the head of the puppet is essential for transmitting emotions and for helping the audience understand the direction of an action or motion. Because their heads follow the player's right hand, the virtual puppets draw the audience's attention and contribute to a more expressive animation. Although controlling virtual puppets with just our two hands is very simple, the interaction is challenging because our hands are not visually represented in the scene. We must therefore infer the position of our hands from the position of the puppet's body and of its head target. And because the result does not mirror our body's exact motion, we need the ability to abstract from the real motion to the represented motion. This indirect manipulation creates a distance between the player and the puppet, and understanding that distance is one important aspect we want to explore, study and measure.

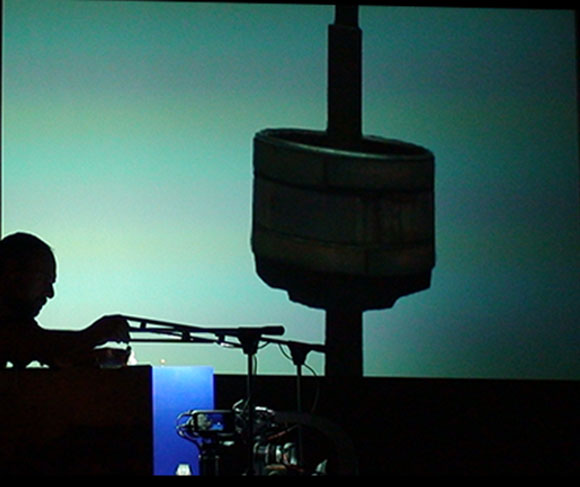

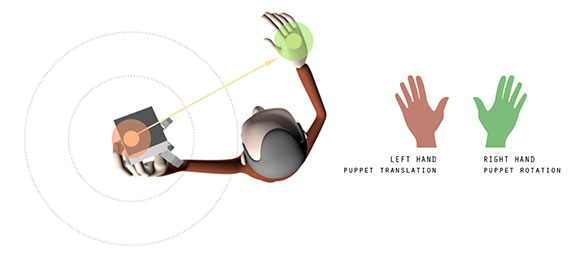

Picture 3 – The right hand makes the puppet rotate. The puppet's head follows the player's right hand, forcing the body to rotate.
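One way to picture how the head "forces" the body to turn is to let the neck absorb the first part of the turn toward the look target and pass the remainder on to the body. The post does not say how ToyWorlds implements this, so the sketch below is only a kinematic approximation under assumptions: a hypothetical 60-degree neck limit, a ground plane with coordinates (x, z), and illustrative names (`wrap`, `look_yaw`, `split_rotation`).

```python
import math

MAX_NECK = math.radians(60)  # hypothetical neck limit


def wrap(angle: float) -> float:
    """Wrap an angle to the range [-pi, pi)."""
    return (angle + math.pi) % (2 * math.pi) - math.pi


def look_yaw(origin, target) -> float:
    """Yaw from origin to target on the ground plane (x, z).

    Yaw 0 faces +z; positive yaw turns toward +x.
    """
    return math.atan2(target[0] - origin[0], target[1] - origin[1])


def split_rotation(body_yaw, head_pos, target, max_neck=MAX_NECK):
    """Return (new_body_yaw, head_yaw_relative_to_body).

    The head turns toward the target up to the neck limit; whatever angle
    is left over is passed on to the body, so a target far to the side
    turns the whole puppet.
    """
    offset = wrap(look_yaw(head_pos, target) - body_yaw)  # turn still needed
    neck = max(-max_neck, min(max_neck, offset))          # part the neck absorbs
    new_body_yaw = wrap(body_yaw + (offset - neck))        # remainder turns the body
    return new_body_yaw, neck


# Target directly to the puppet's side: the neck takes 60 degrees, the body 30.
body, neck = split_rotation(0.0, (0.0, 0.0), (1.0, 0.0))
print(round(math.degrees(body)), round(math.degrees(neck)))  # 30 60
```

A target straight ahead leaves both angles at zero; the further the right hand moves to the side, the more of the turn spills over into the body.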

By assigning a different task to each of our two hands, we challenge the players to take control of their bodies, an abstraction that may be very difficult for children under 6 years old.

The virtual marionettes are rag-doll puppets, dynamically affected by gravity and collisions, which results in a very natural and rich animation.
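A rag-doll is commonly simulated as particles joined by distance constraints. The sketch below uses position Verlet integration with iterative constraint relaxation (the approach popularized by Jakobsen's "Advanced Character Physics"). The post does not say which physics engine ToyWorlds uses, so this is a generic illustration only: it reduces the puppet to a hypothetical three-particle chain held by the hand at particle 0, adds a hypothetical damping factor so the swing settles, and omits collisions.

```python
import math

DAMPING = 0.98  # hypothetical: bleeds off energy so the swing settles


def verlet_step(pos, prev, dt, gravity=-9.81):
    """Position Verlet: next = current + damping * (current - previous) + g * dt^2."""
    nxt = [(x + DAMPING * (x - px), y + DAMPING * (y - py) + gravity * dt * dt)
           for (x, y), (px, py) in zip(pos, prev)]
    return nxt, pos  # new positions, and the current ones become "previous"


def satisfy_links(pos, links, anchor, iterations=20):
    """Relax distance constraints; particle 0 is pinned to the hand."""
    pos = [list(p) for p in pos]
    pos[0] = list(anchor)
    for _ in range(iterations):
        for i, j, rest in links:
            dx, dy = pos[j][0] - pos[i][0], pos[j][1] - pos[i][1]
            dist = math.hypot(dx, dy)
            if dist == 0.0:
                continue
            diff = (dist - rest) / dist
            if i == 0:    # pinned end: move only particle j
                pos[j][0] -= dx * diff
                pos[j][1] -= dy * diff
            elif j == 0:  # pinned end: move only particle i
                pos[i][0] += dx * diff
                pos[i][1] += dy * diff
            else:         # share the correction between both ends
                pos[i][0] += dx * diff / 2
                pos[i][1] += dy * diff / 2
                pos[j][0] -= dx * diff / 2
                pos[j][1] -= dy * diff / 2
    return [tuple(p) for p in pos]


def simulate(steps=600, dt=1 / 60):
    """Drop a three-particle chain held at particle 0 and let it settle."""
    anchor = (0.0, 2.0)                          # hypothetical hand position (m)
    pos = [(0.0, 2.0), (0.5, 2.0), (1.0, 2.0)]   # chain starts horizontal
    links = [(0, 1, 0.5), (1, 2, 0.5)]           # (i, j, rest length)
    prev = list(pos)
    for _ in range(steps):
        pos, prev = verlet_step(pos, prev, dt)
        pos = satisfy_links(pos, links, anchor)
    return pos


print(simulate())
```

After about ten simulated seconds the chain should hang nearly straight below the hand. Because gravity and constraints, rather than keyframes, decide the pose, every small hand movement produces a slightly different swing, which is where the natural look of rag-doll animation comes from.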

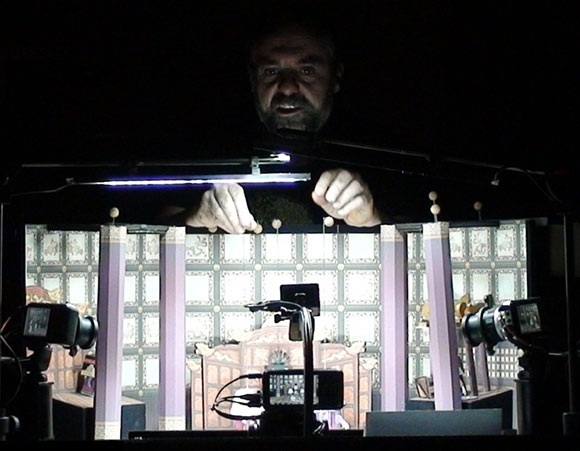

Picture 4 – Screenshot of the game

From the inside out: the game narrative

In Marta's bedroom, strange things happen when she goes to sleep. She dreams of paper boxes that fill her room and make a big mess. Because she is a little too lazy to tidy her bedroom, she has created two little imaginary friends who help her clean up. Joaquim wears a green shirt and is the uncle of António, who wears an orange shirt; both are paper figures and very hard workers.

They can only grab a box when it changes color: Joaquim grabs the green boxes and António grabs the orange boxes. When a box turns blue, either puppet can pick it up just by looking at it with its own look-target square. Green and orange boxes are grabbed with the puppets' bodies, using the player's left hand, and blue boxes are picked up with the look target, using the player's right hand. Each green or orange box grabbed scores one point and each blue box scores ten points. A grabbed box disappears; a box that is not grabbed returns to its natural color after a certain time.
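The box rules can be summarized as a small state machine. This sketch follows the rules as stated (a box of the puppet's own color grabbed with the body scores 1, a blue box picked up with the look target scores 10, grabbed boxes disappear, others revert to their natural color), but the 5-second highlight duration and all names (`Color`, `Box`, `try_grab`) are hypothetical, since the post only says "a certain time".

```python
from dataclasses import dataclass
from enum import Enum


class Color(Enum):
    NATURAL = "natural"
    GREEN = "green"
    ORANGE = "orange"
    BLUE = "blue"


PUPPET_COLOR = {"Joaquim": Color.GREEN, "António": Color.ORANGE}
POINTS = {Color.GREEN: 1, Color.ORANGE: 1, Color.BLUE: 10}
HIGHLIGHT_SECONDS = 5.0  # hypothetical: the post only says "a certain time"


@dataclass
class Box:
    color: Color = Color.NATURAL
    timer: float = 0.0
    gone: bool = False

    def highlight(self, color: Color) -> None:
        """Change the box to a grabbable color and start its countdown."""
        self.color, self.timer = color, HIGHLIGHT_SECONDS

    def update(self, dt: float) -> None:
        """Count down; an ungrabbed box returns to its natural color."""
        if self.gone or self.color is Color.NATURAL:
            return
        self.timer -= dt
        if self.timer <= 0.0:
            self.color = Color.NATURAL


def try_grab(box: Box, puppet: str, with_body: bool) -> int:
    """Points scored when `puppet` touches `box` with its body (left hand)
    or with its look target (right hand); 0 if the grab is not allowed."""
    if box.gone:
        return 0
    if with_body:
        allowed = box.color is PUPPET_COLOR[puppet]  # own color, grabbed by the body
    else:
        allowed = box.color is Color.BLUE            # blue, picked up by looking
    if not allowed:
        return 0
    box.gone = True  # grabbed boxes disappear
    return POINTS[box.color]


box = Box()
box.highlight(Color.BLUE)
print(try_grab(box, "António", with_body=True))   # 0: blue needs the look target
print(try_grab(box, "António", with_body=False))  # 10
```

Encoding the rules this way makes the asymmetry visible: the colored boxes test the left hand (body control), while the high-value blue boxes test the right hand (head control).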

The players bring these two little figures to life and have one and a half minutes to grab the boxes. They start by making the calibration pose, and the first player whose pose is calibrated takes control of the first puppet, Joaquim.
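The start of a round could be modelled as below. This is a sketch under assumptions: the post does not describe the calibration mechanism, so the per-player calibration timestamps, `assign_puppets` and `time_left` are hypothetical; only the 90-second round and the rule that the first calibrated player gets Joaquim come from the text.

```python
ROUND_SECONDS = 90.0               # "one and a half minutes"
PUPPETS = ("Joaquim", "António")   # first calibrated player gets Joaquim


def assign_puppets(calibrated_at: dict) -> dict:
    """Map player ids to puppets by calibration order.

    `calibrated_at` holds, for each player, the time (s) at which the
    tracker accepted their calibration pose (hypothetical input).
    """
    order = sorted(calibrated_at, key=calibrated_at.get)
    return dict(zip(order, PUPPETS))


def time_left(elapsed: float) -> float:
    """Seconds remaining in the round, never negative."""
    return max(0.0, ROUND_SECONDS - elapsed)


print(assign_puppets({"player_a": 3.2, "player_b": 1.7}))
# {'player_b': 'Joaquim', 'player_a': 'António'}
```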

This game evaluates body motor control, an important aspect of puppet manipulation.

The table below shows the game results of this experiment. Two of the 22 children were not able to grab any colored box because they felt stressed by the competition.

[table id=1 /]