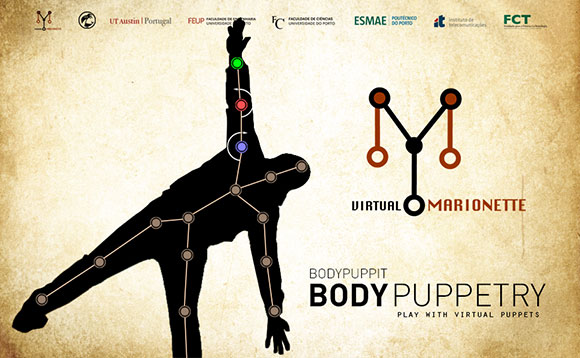

Shape Your Body is a multi-platform digital puppetry application that lets users manipulate silhouette puppets with their bodies. It uses low-cost motion capture technology (Microsoft Kinect) to provide an interactive environment for performance animation. It challenges users to explore their own bodies as marionette controllers and, in doing so, to get to know their bodies a little better.

This project brings the art of shadow puppetry into digital performance animation.

Shadow theatre is an ancient art form that brings inanimate figures to life, and shadow puppetry is a great medium for storytelling. Our objective was therefore to build a digital puppetry tool that non-expert artists could use to create expressive virtual shadow plays through body motion. We deployed a framework based on Microsoft Kinect, OpenNI, and Unity that animates a silhouette in real time. We challenged the participants to play and to search for the most suitable body movement for each character.
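To give a feel for how body motion can drive a flat silhouette, here is a minimal Python sketch. It is not the project's Unity/OpenNI code; it only assumes that a skeleton tracker reports 3D joint positions each frame. The joint names (`hip_center`, `neck`, `shoulder_l`, ...), the `RIG` table, and the `Smoother` class are hypothetical placeholders, not OpenNI identifiers. Each puppet part is driven by one bone, the bone is projected onto the frontal (x, y) plane where a shadow puppet lives, and its angle is expressed relative to the parent part, as in a jointed cut-out puppet.

```python
import math
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical rig: puppet part -> (start joint, end joint, parent part or None).
# Joint names are illustrative placeholders, not OpenNI joint identifiers.
RIG: Dict[str, Tuple[str, str, Optional[str]]] = {
    "torso": ("hip_center", "neck", None),
    "upper_arm_l": ("shoulder_l", "elbow_l", "torso"),
    "forearm_l": ("elbow_l", "hand_l", "upper_arm_l"),
}


def wrap(deg: float) -> float:
    """Wrap an angle in degrees into the range [-180, 180)."""
    return (deg + 180.0) % 360.0 - 180.0


def bone_angle(a: Vec3, b: Vec3) -> float:
    """Absolute angle (degrees) of bone a->b projected onto the x-y plane.

    The depth coordinate z is ignored: a shadow puppet moves in the screen plane.
    """
    dx, dy = b[0] - a[0], b[1] - a[1]
    return math.degrees(math.atan2(dy, dx))


def puppet_rotations(joints: Dict[str, Vec3]) -> Dict[str, float]:
    """Return each part's rotation relative to its parent part (degrees)."""
    absolute = {part: bone_angle(joints[a], joints[b]) for part, (a, b, _) in RIG.items()}
    return {
        part: wrap(absolute[part] - (absolute[parent] if parent else 0.0))
        for part, (_, _, parent) in RIG.items()
    }


class Smoother:
    """Exponential smoothing of angles to damp frame-to-frame sensor jitter."""

    def __init__(self, alpha: float = 0.5) -> None:
        self.alpha = alpha  # 0 < alpha <= 1; higher values follow the body faster
        self.state: Dict[str, float] = {}

    def update(self, angles: Dict[str, float]) -> Dict[str, float]:
        for name, value in angles.items():
            prev = self.state.get(name, value)
            # Step along the shortest arc so 170 -> -170 moves by 20, not 340.
            self.state[name] = wrap(prev + self.alpha * wrap(value - prev))
        return dict(self.state)


if __name__ == "__main__":
    frame = {
        "hip_center": (0.0, 0.0, 2.0),
        "neck": (0.0, 0.5, 2.0),
        "shoulder_l": (-0.2, 0.45, 2.0),
        "elbow_l": (-0.5, 0.45, 2.0),
        "hand_l": (-0.5, 0.75, 2.0),
    }
    print(puppet_rotations(frame))
```

With the sample frame (upright torso, arm held out horizontally, forearm raised), the upper arm comes out at roughly 90 degrees relative to the torso and the forearm at roughly -90 degrees relative to the upper arm. Using parent-relative angles means each rotation can be applied directly to a child element in a scene hierarchy. The smoothing factor is a trade-off between responsiveness and stability that would need tuning for a real sensor.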

Requirements to run this application

– PC / Mac computer

– OpenNI drivers version 1.5 (you can find the Zigfu drivers above)

– Microsoft Kinect sensor

[wpdm_package id="1558"]

[wpdm_package id="1559"]

This project was published at ACM CHI 2012.

You can download the article here:

[wpdm_package id="1414"]

Video

[vimeo 37128614 w=580&h=326]